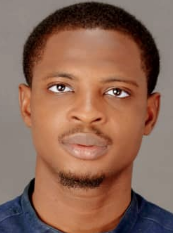

In Nigeria’s vast linguistic landscape, where over 500 languages vie for survival, a quiet revolution is underway. Temi Babalola and his team at Spitch are harnessing the transformative power of text-to-speech (TTS) technology to preserve the rich cultural heritage of Nigerian languages.

In this interview with TheCable’s AYOMIKUNLE DARAMOLA, Babalola spoke on the intricate relations between technology, culture, and ethics, and how his team is working tirelessly to ensure that the preservation of “endangered” Nigerian languages remains a testament to the country’s diverse cultural identity, rather than a mere footnote in the annals of technological progress.

TheCable: What inspired you to start a text-to-speech company?

Babalola: Well, we started out building another product tailored towards students, and we discovered that the voice feature in that product wasn’t working properly because of a problem with speech generation and speech understanding. We were trying to build a tool that lets students record lectures and get a summary of them in text format.

However, the problem was that the lecturers’ accents were quite different from what was used to train the large language models for it. And so, naturally, the next problem for us to solve emerged, and that was when we thought we could build something that helps AI understand African languages and African accents.

TheCable: Did you discover the problem during your time as a student at the university?

Babalola: I wasn’t in the university at the time. I graduated from the University of Ibadan in 2016. I have been out of the university for a while, but we have some customers at the University of Ibadan, the University of Lagos and some students using our products in Babcock.

We have some active student users. Last year, there were a few complaints about the conversion from audio to text, which wasn’t as functional as it should have been.

We ran an experiment with it, and then we realised that this was a major problem and that if we wanted to solve the problem for students, we first needed to solve transcription. That was why we started working on this.

TheCable: How does Spitch TTS technology differ from existing solutions?

Babalola: The problem is not just in the ability to transcribe. The question is, can you do it in multiple languages? There are so many non-English speakers out there, and part of our identity is our language. If we are unable to solve this problem for our local languages, we run the risk of our languages phasing out.

So, today, you can speak Yoruba to ChatGPT, and it can understand it to a degree, but it’s usually not accurate in its transcription, and it doesn’t have an understanding of what you want or what your query is, or what you’re even saying.

Our goal is to increase the probability or the accuracy of the transcription and the accuracy of the generation. We’re not here to replace ChatGPT or solve intelligence.

I think the problem of intelligence itself should be separated from the input and output problems. The input speech and the output speech, that’s where we are. That’s the space within which we’re playing.

The middle area is where intelligence happens, and I think OpenAI, Anthropic and the others are doing a very good job at that.

But for our local languages, there are so many use cases where people who do not speak English face challenges integrating and interacting with AI systems and AI products. So, we’re here to bridge that gap, and that is where we come in and that’s the problem we are looking to solve.

TheCable: Can you elaborate on what you mean by the middle ground where intelligence happens?

Babalola: Today, we have models that are quite good at solving problems, thinking through solutions to a degree and coming up with answers. So you ask something from ChatGPT for example, and you get a response, and more often than not, it answers your questions.

But if I were to ask that question in Yoruba, the chances that it solves that problem or answers that question correctly are reduced. The intelligence is still there. It’s still a very smart tool, and I would go as far as saying intelligence has no language. The in-between layer where computation happens is separate from, and a different construct to, the language itself. So, the problem we are solving is what happens when the input is Yoruba or Igbo.

So, building a solution at the input layer and the output layer is what we think would help bridge the gap between a lot of non-English speakers and AI solutions and AI providers.

TheCable: How many languages does Spitch support?

Babalola: We’re starting with Nigerian languages. We’ll be scaling to West African and some East African languages very soon. But at the moment, the only languages we provide support for are Yoruba, Igbo, Hausa and Nigerian-accented English. Pidgin English will be coming in a few months.

TheCable: What have been your biggest challenges concerning the languages and building the tool?

Babalola: I think the biggest challenges would be what I would classify as data problems and computing problems.

On the data side of things, as you know, these languages are low-resource, meaning that there is not a lot of representation of them on the internet, which means that getting data can be a problem.

The other issue is computing. It is a huge task because we live in a part of the world that doesn’t have a lot of commercial support when it comes to enterprises. Funding has been stressful and, by extension, compute, which is a fundamental requirement for building AI solutions or core AI models, has been a strain. Those are the two major challenges, but we have a really strong, dedicated team, and we found ways to solve those problems.

Take, for example, our text-to-speech output: it was driven by a model that did not require as much data as one would think. With just a few hours, we were able to produce something that made sense and was audible enough for anybody to understand.

We found an algorithmic hack for the data problem. I won’t say we have solved the data problem, but we have an algorithmic hack around it. And for computing, we have quite a few optimisations going on there too.

So, for example, we reduced our model sizes considerably while trying to improve accuracy, such that we don’t need that much computation to actually train our models. We also leverage free resources where we can, and that has gotten us this far.

TheCable: What funding have you received, not necessarily about money, but in terms of access to technological infrastructure?

Babalola: So, the venture capital (VC) ecosystem in Nigeria is kind of dry, so we haven’t got a lot of support from it. However, thanks to some partners like Microsoft, we got a few thousand dollars in credits under the Microsoft for Startups programme, and the same from AWS.

We use those credits to do model training as much as we can. As I mentioned earlier, we have a smart team, like a really smart team, and we found algorithmic hacks around reducing model sizes to ensure that they fit on hardware that we can afford so that we’re not spending too much.

TheCable: What is your target market? You mentioned education earlier, that’s the basis. What space have you grown into lately?

Babalola: We pivoted from Edtech, so we are no longer in the Edtech space. Technology like this has a lot of use cases. And if you were to ask, say, OpenAI, for example, what their target market was, they would have a lot of answers for that, I think.

We also have a lot of answers for that because we have seen many use cases. I will describe who we are selling our products to right now, and what we have seen our customers use it for as opposed to answering the question of target market.

So, our product is targeted at developers. From the log-in page, you can see that it is targeted at developers. We have a growing developer community around the product.

Therefore, we have very good developer documentation. We have an SDK in Python and we are working on a Node.js SDK. Also, for our API, we are working on a really good developer dashboard that will be released soon.

So, the target market right now is developers, and we just want to put it in their hands and see what they do with it. Technology like this is something that you can’t restrict to one use case.

At some point, we would build out use cases or just a few use cases, and those would be our target products, that is, the products that we would eventually go to market with. But we have to start from what is even possible. What are the possibilities of this technology, and what do we see that developers are using it for?

By the way, we’re generating revenue already. So, if we decided to focus solely on developers and just let developers build whatever they want with it, I think eventually we’ll get to some profitability.

TheCable: Who are your main competitors that you follow closely?

Babalola: To the best of my knowledge, no one has done what we have done and very few people have tried or are trying. I know that everyone would find their path to some degree of success in this space.

It is open ground; I mean, competition is always welcome. But so far, to the best of my knowledge, as far as ultra-realistic speech for local languages goes, I have not seen anyone doing that.

TheCable: Let’s talk about use cases. So, you are saying I can pick up one of Shakespeare’s books, translate it to Yoruba with your tool and have ultra-realistic, poetic audio?

Babalola: Yes, you can hear it in Yoruba. You can choose from a male or female voice. We have cool voices. We have the big, more aggressive voice you can use. Yeah, so that experience is possible right now.

TheCable: Are there any ethical challenges you are facing around AI or AI-generated speech misuse?

Babalola: The data that we collect and use for training is publicly available and publicly accessible: data that can be used for training and for which permission to train has been given. On model training, one of the things we’re working on is the ability to classify audio, so that we can detect whether it was generated by us or not.

This would help clear the air regarding some audio files and some disputes that could come up over whether something was generated by AI or not. I mean being able to recognise and differentiate between AI-generated content and actual, real human content.

I think that is a very good start at addressing misuse of the product, and it will be coming up soon. Also, nobody has come to say, ‘My voice was used’. The data we are using for generating voices is data we have the licence to use.

We have restrictions on voices. There are only four voices, and right now, nobody can bring a voice and say, ‘Copy this voice’, although we are able to do that. We are just trying to be careful about how we’re going to launch that feature.